Highlights

|

References

|

The datasets, alignment and saliency models, and related experiments are described in:

The license agreement for data usage implies the citation of the two papers above. Please notice that citing the dataset URL instead of the publications would not be compliant with this license agreement. |

Hollywood-2

|

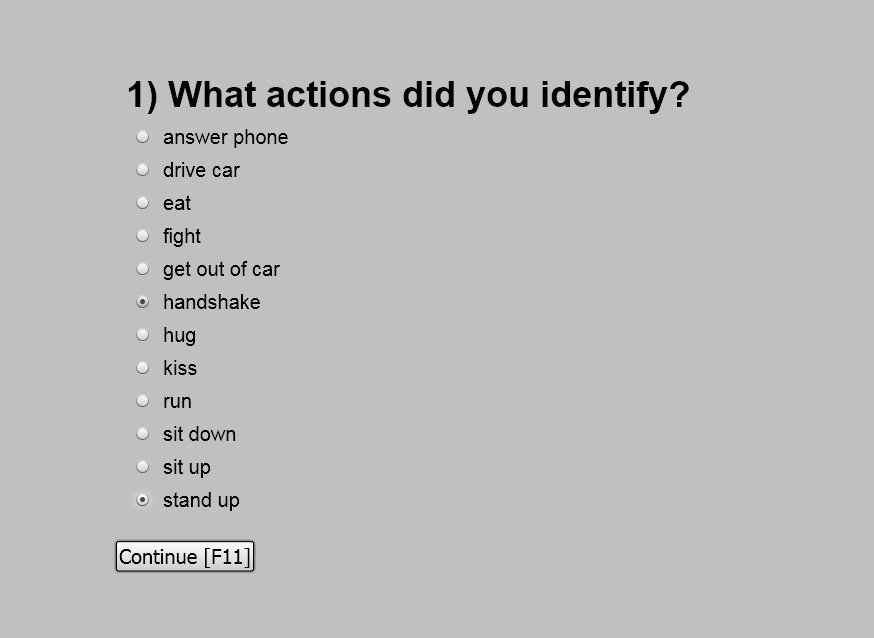

This dataset is one of the largest and most challenging available for real world actions. It contains 12 classes: answering phone, driving car, eating, fighting, getting out of car, shaking hands, hugging, kissing, running, sitting down, sitting up and standing up. These actions are collected from a set of 69 Hollywood movies. It consists of about 487k frames totalling about 20 hours of video and is split into a training set of 823 sequences and a test of 884 sequences. There is no overlap between the 33 movies in the training set and the 36 movies in the test set. |

UCF Sports

|

This high resolution dataset was collected mostly from broadcast television channels. It contains 150 videos covering 9 sports action classes: diving, golf-swinging, kicking, lifting, horseback riding, running, skateboarding, swinging and walking. Unlike Hollywood-2, there are no separate training and test sets, and evaluation of action recognition learning algorithms is typically carried out by leave-one-out cross-validation. |

Eyetracking Setup and Geometry

|

Eye movements were recorded using an SMI iView X HiSpeed 1250 tower-mounted eye tracker, with a sampling frequency of 500Hz. The head of the subject was placed on a chin-rest located at 60cm from the display. Viewing conditions were binocular and gaze data was collected from the dominant eye of the participant. The LCD display had a resolution 1280 x 1024 pixels, with a physical screen size of 47.5 x 29.5cm. Because the resolution varies across the datasets, each video was rescaled to fit the screen, preserving the original aspect ratio. The visual angles subtended by the stimuli were 38.4 degrees in the horizontal plane and ranged from 13.81 to 26.18 degrees in the vertical plane. |

|

|

Calibration and Validation Procedures

|

The calibration procedure was carried out at the beginning of each block. The subject had to follow a target that was placed sequentially at 13 locations evenly distributed across the screen. Accuracy of the calibration was then validated at 4 of these calibrated locations. If the error in the estimated position was greater than 0.75 degrees of visual angle, the experiment was stopped and calibration restarted. At the end of each block, validation was carried out again, to account for fluctuations in the recording environment. If the validation error exceeded 0.75 degrees of visual angle, the data acquired during the block was deemed noisy and discarded from further analysis. Following this procedure, 1.71% of the data had to be discarded. |

|

|

Subjects

|

We have collected data from 16 human volunteers (9 male and 7 female) aged between 21 and 41. We split them into an active group, which had to solve an action recognition task, and a free-viewing group, which was not required to solve any specific task while being presented the videos in the two datasets. There were 12 active subjects (7 male and 5 female) and 4 free viewing subjects (2 male and 2 female). None of the free viewers was aware of the task of the active group and none was a cognitive scientist. |

Recording Protocol

|

Before each video sequence was shown, participants in the active group were required to fixate the center of the screen. Display would proceed automatically using the trigger area-of-interest feature provided by the iView X software. |

|

Participants in the active group had to identify the actions in each video sequence. Their multiple choice actions were recorded through a set of check-boxes displayed at the end of each video, which the subject manipulated using a mouse. Participants in the free viewing group underwent a similar protocol, the only difference being that the questionnaire step was skipped. |

|

|

Data

Use the links below to download our gaze data:

Code

Use the link below to download binaries for our HoG-MBH fixation detector:

Please refer to the included readme file for additional information.

Acknowledgements

|

This work was supported by a grant of the Romanian National Authority for Scientific Research, CNCS - UEFISCDI, under PNII-RU-RC-2/2009. |